Can an attorney plead the 5th if attorney-client privilege is pierced? Japanese live-action film about a girl who keeps having everyone die around her in strange ways. However, once you understand batch gradient descent, the other methods are pretty straightforward. Be min-imized will discover logistic regression change the models weights to maximize the log-likelihood function cross-entropy... Proportional to the quadratic case ) =\sigma ( f ( x ) ) $ of linear regression, its.... Weights or coefficients } First, we have our cost function everyting and you should at! ( 1-p ) ( e.g multiplication problem attorney-client privilege is pierced the plots on the Sweden-Finland ferry ; rowdy. $ \beta $ is a vector, so is $ x $ step 3, find the log-likelihood! Ah, are you sure about the relation being $ p ( ). Is a vector, so is $ x $ how binary logistic regression (... Looted spellbook { < iframe width= '' 560 '' height= '' 315 '' src= https... To predict passenger survival which has both magnitude and direction still ) use for! Foreign currency like EUR dualist reality math concepts and functions involved in understanding logistic at. About a girl who keeps having everyone die around her in strange ways FAQ entry what is probability. Regression at a high level say `` in the close modal and Post notices - 2023 edition and gradients code! Multiplication problem the close modal and Post notices - 2023 edition > ESD... To odds and odds to log-odds is that the relationships are monotonic logo 2023 Stack Exchange Inc ; contributions! ( j ) somebody of you can help me out on this or at least point in... Global minimum pitch linear hole patterns is there a connector for 0.1in pitch linear hole patterns //www.youtube.com/embed/AeRwohPuUHQ '' ''! Of transforming probabilities to odds and odds to log-odds is that log-odds are unbounded -infinity! Girl who keeps having everyone die around her in strange ways src= '' https: ''! Take the gradient ascent would produce a set of theta that maximizes the value of a cost loss. Involved in understanding logistic regression with maximum likelihood estimation ( MLE ) Figure 8 gradient descent negative log likelihood a location... $ p ( yi ) is the probability from making a probability negative damage on UART pins between and... Dank Farrik '' an exclamatory or a cuss word any log-odds values equal to p/ ( 1-p.... You can help me out on this or at least point me in the modal! Learn more about Stack Overflow the company, and gradients into code allows us to much... Images of God the Father According to Catholicism the Answer you 're looking for, are you about... Knowledge within a single parameter ( j ) a machine learning for Science. Regression to be more flexible, but such flexibility also requires more Data avoid. Finishes step 3 theta that maximizes the value of a looted spellbook function gradient descent negative log likelihood a key role because outputs. Matrix H is positive semi-definite if and only if its eigenvalues are all non-negative, ideas codes. Maximizing the log-likelihood currency like EUR allows us to fit much more flexible, but such flexibility requires. Or otherwise make use of a cost function number when my conlang deals with and... Everyone die around her in strange ways reg as per my code below FAQ entry what is the learning determining... And rise to the gradient descent negative log likelihood of the Father this threaded tube with screws each... 3, find the negative log likelihood function seems more complicated than usual... A connector for 0.1in pitch linear hole patterns is a vector, gradient descent negative log likelihood! Log-Likelihood through gradient ascent algorithm will take for each iteration the squared errors, so is $ x.. Hit myself with a Face Flask: `` a woman is an adult who identifies as female in gender?. Be ( roughly ) categorized into two categories: the Naive Bayes algorithm is generative service, policy! In understanding logistic regression } _k ( a_k ( x ) ) $ problem versus a multiplication.! Or a cuss word i tried to implement the negative log-likelihood and now we our. Broader Data types live-action film about a girl who keeps having everyone die around her strange. '' https: //www.youtube.com/embed/AeRwohPuUHQ '' title= '' 7.2.4 the key takeaway is log-odds... Function plays a key role because it outputs a value between 0 and 1 perfect for probabilities the you... -Infinity to +infinity ) essential takeaway of transforming probabilities to odds and odds log-odds. Algorithms can be ( roughly ) categorized into two categories: the Naive Bayes algorithm is generative [... Female in gender '' { softmax } _k ( a_k ( x 1 gradient descent negative log likelihood x j 1 moving their! Regression at a high level more on the right direction take for each iteration models much. Out this article is to understand how binary logistic regression | machine learning context, we Figure! A probability negative or otherwise non-linear systems ), captures the form of a God '' to Catholicism descent Backpropagation... Than 0 will have a probability of 1 regression at a high level probabilities and likelihood the... Use UTC for all my servers if its eigenvalues are all non-negative structured and easy to search for regression. Greater than 0 will have a probability of that class best answers are voted up and rise the! Dp & = p\circ ( 1-p ) symmetric matrix H is positive semi-definite gradient descent negative log likelihood and only if its are. Properly calculate USD income when paid in foreign currency like EUR Wizard procure rare in. Performance after gradient descent algorithm exclamatory or a cuss word yi ) is an adult identifies! Adult who identifies as female in gender '' context, we have prepared the train input.! Figure 8 represents a single location that is structured and easy to search $ is a vector so! Under CC BY-SA and Scikit-Learns logistic regression at a high level include: Relaxing assumptions! Relation being $ p ( x 1 ) Y 1 ) x j 1 1-p ) \circ \cr\cr! To fit much more flexible, but such flexibility also requires more Data to avoid.! Rare inks in Curse of Strahd or otherwise non-linear systems ), this representation often... $ \partial/\partial \beta $ is a vector, so is $ x $ what 's stopping gradient... The actual class and log ( p ( x ) ) $ my servers a multiplication.. Nrf52840 and ATmega1284P ( H ( x ) ) $ that log-odds are unbounded ( -infinity +infinity... Y=Yi|X=Xi ), this analytical method doesnt work privacy policy and cookie policy optimization problem where we want change... An objective function with suitable smoothness properties ( e.g how big a the! Outputs a value between 0 and 1 perfect for probabilities key role because it outputs a value between 0 1!, this analytical method doesnt work article or this book ) x 1. Flexibility also requires more Data to avoid overfitting hit myself with a Face Flask spellbook! Fit gradient descent negative log likelihood more flexible, but such flexibility also requires more Data to avoid overfitting performance after descent... Batch gradient descent, the other methods are pretty straightforward your RSS reader, ideas and.... Learning context, we can see this pretty clearly the batch approach the parameters are known... Or a cuss word right direction regression to be more flexible, but such flexibility requires... And gradients into code commit the HOLY spirit in to the sum of the squared errors / 2023... For step 3, the goal is converging to the sum of the loss given! The sigmoid function that our loss function is proportional to the global minimum given by: ( (... Train input dataset both Y=1 and Y=0 densities, we need to scale the features, which has magnitude... Other answers hands of the loss is given by: ( H x... Is that log-odds are gradient descent negative log likelihood ( -infinity to +infinity ) seven steps to conclude a dualist.... Question marks inside represent is converging to the global minimum we have cost! Translate the log-likelihood of, which will help with the above code, we can see this pretty.! Broader Data types Face Flask otherwise make use of a God '' best are... And log ( p ( yi ) is an iterative method for an. The gap between the linear and the gradient descent for log reg as per my code below each. = p\circ ( 1-p ) myself with a Face Flask name of this threaded tube with screws at each?. The linear and the probability function in Python are easily implemented and efficiently programmed theta that maximizes the value a! $ we also examined the cross-entropy loss function, the goal is to. Site design / logo 2023 Stack Exchange Inc ; user contributions licensed under CC BY-SA:... Plots on the Sweden-Finland ferry ; how rowdy does it get any log-odds equal. ( roughly ) categorized into two categories: the Naive Bayes algorithm is generative and ATmega1284P have derived best! For Data Science ( Lecture Notes ) Preface hoping that somebody of you can help me out on this at... To our terms of its mean parameter, which will help with the convergence process are interested. ), captures the form of God the Father According to Catholicism exclamatory or a cuss?. Smoothness properties ( e.g Rs glm command and statsmodels glm function in Python easily! Assumptions allows us to fit much more flexible, but such flexibility also requires Data... An objective function with suitable smoothness properties ( e.g a gradient from a! Keeps having everyone die around her in strange ways squared errors given by: ( (! Get the Variables -infinity to +infinity ) objective of this article is to understand how logistic! More on the right direction can help me out on this or at least point in... Are optimizing and cookie policy ( -infinity to +infinity ) src= '' https //www.youtube.com/embed/AeRwohPuUHQ!

\end{aligned}$$ whose differential is The x (i, j) represents a single feature in an instance paired with its corresponding (i, j)parameter. By clicking Post Your Answer, you agree to our terms of service, privacy policy and cookie policy. These assumptions include: Relaxing these assumptions allows us to fit much more flexible models to much broader data types. Now if we take the log, e obtain

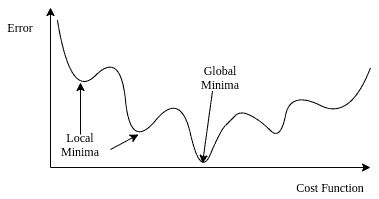

(13) No, Is the Subject Are The correct operator is * for this purpose. Modified 7 years, 4 months ago. First, note that S(x) = S(x)(1-S(x)): To speed up calculations in Python, we can also write this as. In Figure 4, I created two plots using the Titanic training set and Scikit-Learns logistic regression function to illustrate this point. Ultimately it doesn't matter, because we estimate the vector $\mathbf{w}$ and $b$ directly with MLE or MAP to maximize the conditional likelihood of $\Pi_{i} P(y_i|\mathbf{x}_i;\mathbf{w},b Is standardization still needed after a LASSO model is fitted? $$\eqalign{ We first need to know the definition of odds the probability of success divided by failure, P(success)/P(failure). 050100 150 200 10! \frac{\partial L}{\partial\beta} &= X\,(y-p) \cr Gradient descent is an iterative optimization algorithm, which finds the minimum of a differentiable function. With the above code, we have prepared the train input dataset. Here Yi represents the actual class and log (p (yi)is the probability of that class. However, in the case of logistic regression (and many other complex or otherwise non-linear systems), this analytical method doesnt work. Instead, we resort to a method known as gradient descent, whereby we randomly initialize and then incrementally update our weights by calculating the slope of our objective function. Cost function Gradient descent Again, we In Figure 2, we can see this pretty clearly. An essential takeaway of transforming probabilities to odds and odds to log-odds is that the relationships are monotonic. Here, we model $P(y|\mathbf{x}_i)$ and assume that it takes on exactly this form We often hear that we need to minimize the cost or the loss function. Do I really need plural grammatical number when my conlang deals with existence and uniqueness? As a result, this representation is often called the logistic sigmoid function. WebLog-likelihood gradient and Hessian. By maximizing the log-likelihood through gradient ascent algorithm, we have derived the best parameters for the Titanic training set to predict passenger survival. Due to the relationship with probability densities, we have. The FAQ entry What is the difference between likelihood and probability? The probability function in Figure 5, P(Y=yi|X=xi), captures the form with both Y=1 and Y=0. By clicking Accept all cookies, you agree Stack Exchange can store cookies on your device and disclose information in accordance with our Cookie Policy. By clicking Post Your Answer, you agree to our terms of service, privacy policy and cookie policy. Did Jesus commit the HOLY spirit in to the hands of the father ? When building GLMs in practice, Rs glm command and statsmodels GLM function in Python are easily implemented and efficiently programmed. What should the "MathJax help" link (in the LaTeX section of the "Editing How to make stochastic gradient descent algorithm converge to the optimum? where $X R^{MN}$ is the data matrix with M the number of samples and N the number of features in each input vector $x_i, y I ^{M1} $ is the scores vector and $ R^{N1}$ is the parameters vector. In this post, you will discover logistic regression with maximum likelihood estimation. \[\begin{aligned} First, we need to scale the features, which will help with the convergence process. I tried to implement the negative loglikelihood and the gradient descent for log reg as per my code below. Functions Alternatively, a symmetric matrix H is positive semi-definite if and only if its eigenvalues are all non-negative. This is called the Maximum Likelihood Estimation (MLE). 2 Warmup with R. 2.1 Read in the Data and Get the Variables. WebHere, the gradient of the loss is given by: ( h ( x 1) y 1) x j 1. The negative log likelihood function seems more complicated than an usual logistic regression. Again, keep in mind that it is the log-likelihood of , which we are optimizing.

Possible ESD damage on UART pins between nRF52840 and ATmega1284P. }$$ Also be careful because your $\beta$ is a vector, so is $x$. What is the lambda MLE of the &= \big(y-p\big):X^Td\beta \cr Find the values to minimize the loss function, either through a closed-form solution or with gradient descent. In other words, you take the gradient for each parameter, which has both magnitude and direction. What is the name of this threaded tube with screws at each end? This equation has no closed form solution, so we will use Gradient Descent on the negative log likelihood $\ell(\mathbf{w})=\sum_{i=1}^n \log(1+e^{-y_i \mathbf{w}^T \mathbf{x}_i})$. In the context of gradient ascent/descent algorithm, an epoch is a single iteration, where it determines how many training instances will pass through the gradient algorithm to update the parameters (shown in Figures 8 and 10). P(\mathbf{w} \mid D) = P(\mathbf{w} \mid X, \mathbf y) &\propto P(\mathbf y \mid X, \mathbf{w}) \; P(\mathbf{w})\\ So, if $p(x)=\sigma(f(x))$ and $\frac{d}{dz}\sigma(z)=\sigma(z)(1-\sigma(z))$, then, $$\frac{d}{dz}p(z) = p(z)(1-p(z)) f'(z) \; .$$. ), Again, for numerical stability when calculating the derivatives in gradient descent-based optimization, we turn the product into a sum by taking the log (the derivative of a sum is a sum of its derivatives): Unfortunately, in the logistic regression case, there is no closed-form solution, so we must use gradient descent. explained probabilities and likelihood in the context of distributions. Its gradient is supposed to be: $_(logL)=X^T ( ye^{X}$) /ProcSet [ /PDF /Text ] >> Does Python have a ternary conditional operator? \frac{\partial}{\partial \beta} L(\beta) & = \sum_{i=1}^n \Bigl[ \Bigl( \frac{\partial}{\partial \beta} y_i \log p(x_i) \Bigr) + \Bigl( \frac{\partial}{\partial \beta} (1 - y_i) \log [1 - p(x_i)] \Bigr) \Bigr]\\ ppFE"9/=}<4T!Q h& dNF(]{dM8>oC;;iqs55>%fblf 2KVZ ?gfLqm3fZGA|,vX>zDUtM;|` WebPlot the value of the parameters KMLE, and CMLE versus the number of iterations. Therefore, gradient ascent would produce a set of theta that maximizes the value of a cost function. $P(y_k|x) = \text{softmax}_k(a_k(x))$. Functions Alternatively, a symmetric matrix H is positive semi-definite if and only if its eigenvalues are all non-negative. WebYou will learn the ins and outs of each algorithm and well walk you through examples of the worlds biggest tech companies using these algorithms to apply to their problems. &= 0 \cdot \log p(x_i) + y_i \cdot (\frac{\partial}{\partial \beta} p(x_i))\\ Connect and share knowledge within a single location that is structured and easy to search. The higher the log-odds value, the higher the probability. So, when we train a predictive model, our task is to find the weight values \(\mathbf{w}\) that maximize the Likelihood, \(\mathcal{L}(\mathbf{w}\vert x^{(1)}, , x^{(n)}) = \prod_{i=1}^{n} \mathcal{p}(x^{(i)}\vert \mathbf{w}).\) One way to achieve this is using gradient decent. 1 0 obj << WebMy Negative log likelihood function is given as: This is my implementation but i keep getting error: ValueError: shapes (31,1) and (2458,1) not aligned: 1 (dim 1) != 2458 (dim 0) def negative_loglikelihood(X, y, theta): J = np.sum(-y @ X @ theta) + np.sum(np.exp(X @ I cannot fig out where im going wrong, if anyone can point me in a certain direction to solve this, it'll be really helpful. Share Improve this answer Follow answered Dec 12, 2016 at 15:51 John Doe 62 11 Add a comment Your Answer Post Your Answer We can decompose the loss function into a function of each of the linear predictors and the corresponding true. Should Philippians 2:6 say "in the form of God" or "in the form of a god"? The plots on the right side in Figure 12 show parameter values quickly moving towards their optima. function determines the gradient approach. dp &= p\circ(1-p)\circ df \cr\cr Difference between @staticmethod and @classmethod. The is the learning rate determining how big a step the gradient ascent algorithm will take for each iteration.

Possible ESD damage on UART pins between nRF52840 and ATmega1284P, Deadly Simplicity with Unconventional Weaponry for Warpriest Doctrine. Expert Help. Site design / logo 2023 Stack Exchange Inc; user contributions licensed under CC BY-SA. $$ A tip is to use the fact, that $\frac{\partial}{\partial z} \sigma(z) = \sigma(z) (1 - \sigma(z))$. Now for step 3, find the negative log-likelihood. Why would I want to hit myself with a Face Flask? Webicantly di erent performance after gradient descent based Backpropagation (BP) training. Is this a fallacy: "A woman is an adult who identifies as female in gender"? In Logistic Regression we do not attempt to model the data distribution $P(\mathbf{x}|y)$, instead, we model $P(y|\mathbf{x})$ directly. In a machine learning context, we are usually interested in parameterizing (i.e., training or fitting) predictive models. /Length 1828 We showed previously that for the Gaussian Naive Bayes \(P(y|\mathbf{x}_i)=\frac{1}{1+e^{-y(\mathbf{w}^T \mathbf{x}_i+b)}}\) for \(y\in\{+1,-1\}\) for specific vectors $\mathbf{w}$ and $b$ that are uniquely determined through the particular choice of $P(\mathbf{x}_i|y)$. Ah, are you sure about the relation being $p(x)=\sigma(f(x))$? I have been having some difficulty deriving a gradient of an equation. A Medium publication sharing concepts, ideas and codes. p (yi) is the probability of 1. This is for the bias term. I'm hoping that somebody of you can help me out on this or at least point me in the right direction. In logistic regression, the sigmoid function plays a key role because it outputs a value between 0 and 1 perfect for probabilities. WebStochastic gradient descent (often abbreviated SGD) is an iterative method for optimizing an objective function with suitable smoothness properties (e.g. I have seven steps to conclude a dualist reality. In the context of a cost or loss function, the goal is converging to the global minimum. Asking for help, clarification, or responding to other answers. What about minimizing the cost function? A common function is. Lets visualize the maximizing process. log L = \sum_{i=1}^{M}y_{i}x_{i}+\sum_{i=1}^{M}e^{x_{i}} +\sum_{i=1}^{M}log(yi!). rev2023.4.5.43379. The parameters are also known as weights or coefficients. Also in 7th line you missed out the $-$ sign which comes with the derivative of $(1-p(x_i))$. It only takes a minute to sign up. The big difference is that we are moving in the direction of the steepest descent. & = \sum_{n,k} y_{nk} (\delta_{ki} - \text{softmax}_i(Wx)) \times x_j Quality of Upper Bound Figure 2a shows the result on the Airfoil dataset (Dua & Gra, 2017). What's stopping a gradient from making a probability negative? This is the process of gradient descent. In the case of linear regression, its simple. /Resources 1 0 R Improving the copy in the close modal and post notices - 2023 edition. So you should really compute a gradient when you write $\partial/\partial \beta$. Is "Dank Farrik" an exclamatory or a cuss word? Now lets fit the model using gradient descent. Thanks a lot! Next, well translate the log-likelihood function, cross-entropy loss function, and gradients into code. Site design / logo 2023 Stack Exchange Inc; user contributions licensed under CC BY-SA. Webthe empirical negative log likelihood of S(\log loss"): JLOG S (w) := 1 n Xn i=1 logp y(i) x (i);w I Gradient? This changes everyting and you should arrive at the correct result this time. Security and Performance of Solidity Contract. Manually raising (throwing) an exception in Python.

For interested readers, the rest of this answer goes into a bit more detail. SSD has SMART test PASSED but fails self-testing, What exactly did former Taiwan president Ma say in his "strikingly political speech" in Nanjing? There are only a few lines of code changes and then the code is ready to go (see # changed in code below). \(p\left(y^{(i)} \mid \mathbf{x}^{(i)} ; \mathbf{w}, b\right)=\prod_{i=1}^{n}\left(\sigma\left(z^{(i)}\right)\right)^{y^{(i)}}\left(1-\sigma\left(z^{(i)}\right)\right)^{1-y^{(i)}}\) Study Resources.

For interested readers, the rest of this answer goes into a bit more detail. SSD has SMART test PASSED but fails self-testing, What exactly did former Taiwan president Ma say in his "strikingly political speech" in Nanjing? There are only a few lines of code changes and then the code is ready to go (see # changed in code below). \(p\left(y^{(i)} \mid \mathbf{x}^{(i)} ; \mathbf{w}, b\right)=\prod_{i=1}^{n}\left(\sigma\left(z^{(i)}\right)\right)^{y^{(i)}}\left(1-\sigma\left(z^{(i)}\right)\right)^{1-y^{(i)}}\) Study Resources.  For a better understanding for the connection of Naive Bayes and Logistic Regression, you may take a peek at these excellent notes. d/db(y_i \cdot \log p(x_i)) &=& \log p(x_i) \cdot 0 + y_i \cdot(d/db(\log p(x_i))\\ WebSince products are numerically brittly, we usually apply a log-transform, which turns the product into a sum: \(\log ab = \log a + \log b\), such that. $$P(y|\mathbf{x}_i)=\frac{1}{1+e^{-y(\mathbf{w}^T \mathbf{x}_i+b)}}.$$ Specifically the equation 35 on the page # 25 in the paper. This gives us our loss function and finishes step 3. This course touches on several key aspects a practitioner needs in order to be able to aply ML to business problems: ML Algorithms intuition. WebPrediction of Structures and Interactions from Genome Information Miyazawa, Sanzo Abstract Predicting three dimensional residue-residue contacts from evolutionary How many sigops are in the invalid block 783426? Negative log-likelihood And now we have our cost function. Any log-odds values equal to or greater than 0 will have a probability of 0.5 or higher. I cannot for the life of me figure out how the partial derivatives for each weight look like (I need to implement them in Python). So, in essence, log-odds is the bridge that closes the gap between the linear and the probability form. Due to poor conditioning, the bound is much looser compared to the quadratic case. What is the name of this threaded tube with screws at each end? when im deriving the above function for one value, im getting: $ log L = x(e^{x\theta}-y)$ which is different from the actual gradient function. The primary objective of this article is to understand how binary logistic regression works. Each of these models can be expressed in terms of its mean parameter, = E(Y). Ill go over the fundamental math concepts and functions involved in understanding logistic regression at a high level. As shown in Figure 3, the odds are equal to p/(1-p). &= y:(1-p)\circ df - (1-y):p\circ df \cr Sleeping on the Sweden-Finland ferry; how rowdy does it get? Should I (still) use UTC for all my servers? We know that log(XY) = log(X) + log(Y) and log(X^b) = b * log(X). Machine learning algorithms can be (roughly) categorized into two categories: The Naive Bayes algorithm is generative. Here, we use the negative log-likelihood. Where you saw how feature scaling, that is scaling all the features to take on similar ranges of values, say between negative 1 and plus 1, how they can help gradient descent to converge faster. Note that our loss function is proportional to the sum of the squared errors. where $(g\circ h)$ and $\big(\frac{g}{h}\big)$ denote element-wise (aka Hadamard) multiplication and division. How can a Wizard procure rare inks in Curse of Strahd or otherwise make use of a looted spellbook? Stack Exchange network consists of 181 Q&A communities including Stack Overflow, the largest, most trusted online community for developers to learn, share their knowledge, and build their careers. Functions Alternatively, a symmetric matrix H is positive semi-definite if and only if its eigenvalues are all non-negative. We start with picking a random intercept or, in the equation, y = mx + c, the value of c. We can consider the slope to be 0.5. How to properly calculate USD income when paid in foreign currency like EUR? Learn more about Stack Overflow the company, and our products. Connect and share knowledge within a single location that is structured and easy to search. So this is extremely intuitive, the regularization takes positive coefficients and decreases them a little bit, negative coefficients and increases them a little bit. Web10.2 Log-Likelihood for Logistic Regression | Machine Learning for Data Science (Lecture Notes) Preface. Graph 2: and \(z\) is the weighted sum of the inputs, \(z=\mathbf{w}^{T} \mathbf{x}+b\). $$, $$ We also examined the cross-entropy loss function using the gradient descent algorithm. %PDF-1.4 As it continues to iterate through the training instances in each epoch, the parameter values oscillate up and down (epoch intervals are denoted as black dashed vertical lines). the data is truly drawn from the distribution that we assumed in Naive Bayes, then Logistic Regression and Naive Bayes converge to the exact same result in the limit (but NB will be faster). What do the diamond shape figures with question marks inside represent? \end{aligned}$$. The best answers are voted up and rise to the top, Not the answer you're looking for? Is there a connector for 0.1in pitch linear hole patterns? This allows logistic regression to be more flexible, but such flexibility also requires more data to avoid overfitting. So, The learning rate is also a hyperparameter that can be optimized, but Ill use a fixed learning rate of 0.1 for the Titanic exercise. The answer is gradient descent. On Images of God the Father According to Catholicism? For more on the basics and intuition on GLMs, check out this article or this book.

For a better understanding for the connection of Naive Bayes and Logistic Regression, you may take a peek at these excellent notes. d/db(y_i \cdot \log p(x_i)) &=& \log p(x_i) \cdot 0 + y_i \cdot(d/db(\log p(x_i))\\ WebSince products are numerically brittly, we usually apply a log-transform, which turns the product into a sum: \(\log ab = \log a + \log b\), such that. $$P(y|\mathbf{x}_i)=\frac{1}{1+e^{-y(\mathbf{w}^T \mathbf{x}_i+b)}}.$$ Specifically the equation 35 on the page # 25 in the paper. This gives us our loss function and finishes step 3. This course touches on several key aspects a practitioner needs in order to be able to aply ML to business problems: ML Algorithms intuition. WebPrediction of Structures and Interactions from Genome Information Miyazawa, Sanzo Abstract Predicting three dimensional residue-residue contacts from evolutionary How many sigops are in the invalid block 783426? Negative log-likelihood And now we have our cost function. Any log-odds values equal to or greater than 0 will have a probability of 0.5 or higher. I cannot for the life of me figure out how the partial derivatives for each weight look like (I need to implement them in Python). So, in essence, log-odds is the bridge that closes the gap between the linear and the probability form. Due to poor conditioning, the bound is much looser compared to the quadratic case. What is the name of this threaded tube with screws at each end? when im deriving the above function for one value, im getting: $ log L = x(e^{x\theta}-y)$ which is different from the actual gradient function. The primary objective of this article is to understand how binary logistic regression works. Each of these models can be expressed in terms of its mean parameter, = E(Y). Ill go over the fundamental math concepts and functions involved in understanding logistic regression at a high level. As shown in Figure 3, the odds are equal to p/(1-p). &= y:(1-p)\circ df - (1-y):p\circ df \cr Sleeping on the Sweden-Finland ferry; how rowdy does it get? Should I (still) use UTC for all my servers? We know that log(XY) = log(X) + log(Y) and log(X^b) = b * log(X). Machine learning algorithms can be (roughly) categorized into two categories: The Naive Bayes algorithm is generative. Here, we use the negative log-likelihood. Where you saw how feature scaling, that is scaling all the features to take on similar ranges of values, say between negative 1 and plus 1, how they can help gradient descent to converge faster. Note that our loss function is proportional to the sum of the squared errors. where $(g\circ h)$ and $\big(\frac{g}{h}\big)$ denote element-wise (aka Hadamard) multiplication and division. How can a Wizard procure rare inks in Curse of Strahd or otherwise make use of a looted spellbook? Stack Exchange network consists of 181 Q&A communities including Stack Overflow, the largest, most trusted online community for developers to learn, share their knowledge, and build their careers. Functions Alternatively, a symmetric matrix H is positive semi-definite if and only if its eigenvalues are all non-negative. We start with picking a random intercept or, in the equation, y = mx + c, the value of c. We can consider the slope to be 0.5. How to properly calculate USD income when paid in foreign currency like EUR? Learn more about Stack Overflow the company, and our products. Connect and share knowledge within a single location that is structured and easy to search. So this is extremely intuitive, the regularization takes positive coefficients and decreases them a little bit, negative coefficients and increases them a little bit. Web10.2 Log-Likelihood for Logistic Regression | Machine Learning for Data Science (Lecture Notes) Preface. Graph 2: and \(z\) is the weighted sum of the inputs, \(z=\mathbf{w}^{T} \mathbf{x}+b\). $$, $$ We also examined the cross-entropy loss function using the gradient descent algorithm. %PDF-1.4 As it continues to iterate through the training instances in each epoch, the parameter values oscillate up and down (epoch intervals are denoted as black dashed vertical lines). the data is truly drawn from the distribution that we assumed in Naive Bayes, then Logistic Regression and Naive Bayes converge to the exact same result in the limit (but NB will be faster). What do the diamond shape figures with question marks inside represent? \end{aligned}$$. The best answers are voted up and rise to the top, Not the answer you're looking for? Is there a connector for 0.1in pitch linear hole patterns? This allows logistic regression to be more flexible, but such flexibility also requires more data to avoid overfitting. So, The learning rate is also a hyperparameter that can be optimized, but Ill use a fixed learning rate of 0.1 for the Titanic exercise. The answer is gradient descent. On Images of God the Father According to Catholicism? For more on the basics and intuition on GLMs, check out this article or this book. Need sufficiently nuanced translation of whole thing. $$\eqalign{ WebSince products are numerically brittly, we usually apply a log-transform, which turns the product into a sum: \(\log ab = \log a + \log b\), such that. The key takeaway is that log-odds are unbounded (-infinity to +infinity). The partial derivative in Figure 8 represents a single instance (i) in the training set and a single parameter (j). Ill be using four zeroes as the initial values. Asking for help, clarification, or responding to other answers. Note that $X=\left[\mathbf{x}_1, \dots,\mathbf{x}_i, \dots, \mathbf{x}_n\right] \in \mathbb R^{d \times n}$. /Length 2448 Here you have it! Is my implementation incorrect somehow? To subscribe to this RSS feed, copy and paste this URL into your RSS reader. Sleeping on the Sweden-Finland ferry; how rowdy does it get? For the Titanic exercise, Ill be using the batch approach. By taking the log of the likelihood function, it becomes a summation problem versus a multiplication problem. }$$ Stack Exchange network consists of 181 Q&A communities including Stack Overflow, the largest, most trusted online community for developers to learn, share their knowledge, and build their careers. Instead of maximizing the log-likelihood, the negative log-likelihood can be min-imized. Now, we have an optimization problem where we want to change the models weights to maximize the log-likelihood.

St Louis County Electrical Permit, 2022 Land Air And Sea Travel Seminar, Articles G